Introduction

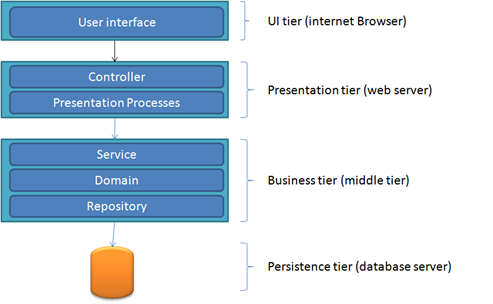

This post continues from my previous post. Here I will create an ASP.NET MVC application using the architecture described there. I will start by laying out the requirements, then drive out the behaviour through tests, implement Unity, and create the layers for the UI, controllers and Presentation Processes.

Since writing my previous post, I have come across a blog that contains some really good tips and best practices for ASP.NET MVC, which I recommend you read.

http://weblogs.asp.net/rashid/archive/2009/04/01/asp-net-mvc-best-practices-part-1.aspx

http://weblogs.asp.net/rashid/archive/2009/04/03/asp-net-mvc-best-practices-part-2.aspx

Requirements

To start, we need requirements, since development should be driven by them. In business analyst language, this is the requirement that I will be working against.

- As a user, when browsing the customer feature within the application, I expect to see a list of customers. Each customer will contain the following information:

- Customer name

- Account number

- Account Manager

- City

- Country

Where to start

ASP.NET MVC is focused around controllers and actions, so it's a natural place to start describing how you implement this architecture. On a day-to-day basis, I write tests first that drive out the behaviour. As my application grows, my layers become cemented. The names of classes denote their responsibility and context.

Creating the Project

I am starting with a new “ASP.NET MVC Web Application” project called “Web” and a test project called “Web.Specs”. My references are:

Web application

Test Project

- NUnit – “nunit.framework.dll”.

- NBehave (using NSpec only) – “NBehave.Spec.NUnit.dll”.

- Rhino Mocks – “Rhino.Mocks.dll”.

- MVCContrib – “MvcContrib.TestHelper.dll” and “MvcContrib.dll”.

Test first

Here is the test for the requirement defined above, putting the controller under test.

    using System.Collections.Generic;
    using System.Web.Mvc;
    using MvcContrib.TestHelper;
    using NBehave.Spec.NUnit;
    using NUnit.Framework;
    using Rhino.Mocks;
    using Web.Controllers;
    using Web.PresentationProcesses.Customers;

    namespace Web.Specs
    {
        [TestFixture]
        public class Browsing_a_list_of_customers
        {
            private CustomerController controller;
            private ICustomerAgent customerAgent;

            [SetUp]
            public void Setup()
            {
                customerAgent = MockRepository.GenerateStub<ICustomerAgent>();
                controller = new TestControllerBuilder()
                    .CreateController<CustomerController>(new object[] { customerAgent });
            }

            [Test]
            public void Should_pass_a_list_of_customers_to_the_view()
            {
                var customers = new List<Customer>();
                customerAgent.Expect(x => x.GetCustomerList()).Return(customers);

                ViewResult result = controller.List();

                result.ShouldNotBeNull();
                result.AssertViewRendered().ForView(CustomerController.ListViewName);
                customerAgent.AssertWasCalled(x => x.GetCustomerList());
            }
        }
    }

This test has driven out the “CustomerController”, an interface called “ICustomerAgent” and two objects called “Customer” and “CustomerListViewModel”.

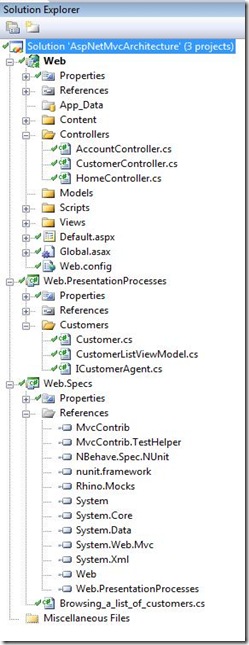

I have created another class library called “Web.PresentationProcesses” which doesn’t have any additional references. I have placed the Customer, CustomerListViewModel and the ICustomerAgent interface under a new folder called “Customers” in the Web.PresentationProcesses assembly.

The code created so far is:

    //Customer.cs
    namespace Web.PresentationProcesses.Customers
    {
        public class Customer
        {
            public string Name { get; set; }
            public string AccountNumber { get; set; }
            public string AccountManagerName { get; set; }
            public string City { get; set; }
            public string Country { get; set; }
        }
    }

    //CustomerListViewModel.cs
    using System.Collections.Generic;

    namespace Web.PresentationProcesses.Customers
    {
        public class CustomerListViewModel
        {
            public List<Customer> Customers { get; set; }
        }
    }

    //ICustomerAgent.cs
    using System.Collections.Generic;

    namespace Web.PresentationProcesses.Customers
    {
        public interface ICustomerAgent
        {
            List<Customer> GetCustomerList();
        }
    }

    //CustomerController.cs
    using System.Web.Mvc;
    using Web.PresentationProcesses.Customers;

    namespace Web.Controllers
    {
        public class CustomerController : Controller
        {
            private readonly ICustomerAgent customerAgent;

            public const string ListViewName = "List";

            public CustomerController(ICustomerAgent customerAgent)
            {
                this.customerAgent = customerAgent;
            }

            public ViewResult List()
            {
                var viewModel = new CustomerListViewModel
                {
                    Customers = customerAgent.GetCustomerList()
                };

                return View(ListViewName, viewModel);
            }
        }
    }

At this point the solution looks like this:

At the moment, the unit test will pass but if you run the application it will be broken because we don’t have a view and the customer controller doesn’t have a default constructor.
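To see why the missing default constructor matters, here is a minimal sketch. It assumes the default controller factory creates controllers through reflection in roughly the way `Activator.CreateInstance` does, which needs a parameterless constructor. `IFakeAgent`, `FakeController` and `DefaultConstructorDemo` are hypothetical stand-ins for illustration, not types from this solution.

```csharp
using System;

// Hypothetical stand-in for ICustomerAgent.
public interface IFakeAgent { }

// Hypothetical stand-in for CustomerController.
public class FakeController
{
    public IFakeAgent Agent { get; private set; }

    // The only constructor takes a dependency, so there is no default constructor.
    public FakeController(IFakeAgent agent)
    {
        Agent = agent;
    }
}

public static class DefaultConstructorDemo
{
    // Returns true when the type can be created without constructor arguments.
    public static bool CanCreateWithoutArguments(Type type)
    {
        try
        {
            Activator.CreateInstance(type);
            return true;
        }
        catch (MissingMethodException)
        {
            // Thrown when no parameterless constructor exists.
            return false;
        }
    }

    public static void Main()
    {
        Console.WriteLine(CanCreateWithoutArguments(typeof(FakeController))); // False
        Console.WriteLine(CanCreateWithoutArguments(typeof(object)));         // True
    }
}
```

Something has to supply the constructor argument at runtime, which is the job the container takes on later in this post.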

Creating the View

- Create a new folder called “Customer” inside the “Views” folder

- In the newly created folder, create a strongly typed view called “List” using the type “CustomerListViewModel”.

- Open the Site.Master view. In the “menucontainer/menu” div, add the following under the link to the “Home/About” page.

<li><%= Html.ActionLink("List", "List", "Customer")%></li>

- Add the following to the List view.

    <%@ Page Title="" Language="C#" MasterPageFile="~/Views/Shared/Site.Master" Inherits="System.Web.Mvc.ViewPage<Web.PresentationProcesses.Customers.CustomerListViewModel>" %>
    <%@ Import Namespace="MvcContrib" %>

    <asp:Content ID="Content1" ContentPlaceHolderID="head" runat="server">
        <title>Customer List</title>
    </asp:Content>

    <asp:Content ID="Content2" ContentPlaceHolderID="MainContent" runat="server">
        <h2>Customer List</h2>
        <table cellpadding="1" cellspacing="0" border="1">
            <thead>
                <tr>
                    <th>Name:</th>
                    <th>Account Number:</th>
                    <th>Account Manager:</th>
                    <th>City:</th>
                    <th>Country:</th>
                </tr>
            </thead>
            <tbody>
                <% Model.Customers.ForEach(customer => { %>
                <tr>
                    <td><%= customer.Name %></td>
                    <td><%= customer.AccountNumber %></td>
                    <td><%= customer.AccountManagerName %></td>
                    <td><%= customer.City %></td>
                    <td><%= customer.Country %></td>
                </tr>
                <% }); %>
            </tbody>
        </table>
    </asp:Content>

The next stage is to have an IoC container resolve the dependencies for us at runtime.

Implementing Inversion of Control

Inversion of control / dependency injection has become very popular over the last couple of years and is a great practice to use. There are quite a few containers on the market at the moment. All are very good, and apart from the common functionality that they all share, some of the containers have unique qualities. I have mainly used Castle Windsor, Unity and Ninject. My personal favourite is Ninject because it has a simple fluent interface and contextual binding. At work we are using Unity, mainly because it's from Microsoft, but we do have applications that use Castle Windsor and Spring.NET. I find that once you know one container it's really easy to use another. Some of my fellow developers and I do experiment with different containers. Although it doesn’t take long to swap them, using the Common Service Locator will make things easier.

Before I start registering types, there is a common anti-pattern that I have seen. The container is defined in the web application, which sits at the top of the layer stack, and the web application then references all of the layers below it so that it can add their types to the container. The solution is to pass the container reference, via an interface, to each layer and allow each layer to register its own types.

- Starting from the top in the web application, new up the container and register only the types that live in the web application, such as the controllers.

- Then from the web application, pass the container reference to the PresentationProcesses layer via an interface. The PresentationProcesses assembly will expose a module object that accepts the container reference.

- The module in the PresentationProcesses layer will register its types and then could pass the container reference to the layer below it. If you are using WCF services to expose your service layer then the chain stops here. If your application doesn’t have external services and runs in-process then this pattern continues going down the layer stack.
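The steps above can be sketched without any container library. This is a minimal, self-contained illustration of the chaining idea only: `IRegistry` and `SimpleRegistry` are hypothetical stand-ins for a real container, and `ILayerModule`, `ServicesModule`, `ICustomerService`, `IAgent` and the other names are assumptions made for the example, not types from this solution.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, minimal stand-in for a container interface.
public interface IRegistry
{
    void Register<TFrom, TTo>() where TTo : TFrom, new();
    TFrom Resolve<TFrom>();
}

public class SimpleRegistry : IRegistry
{
    private readonly Dictionary<Type, Func<object>> factories =
        new Dictionary<Type, Func<object>>();

    public void Register<TFrom, TTo>() where TTo : TFrom, new()
    {
        factories[typeof(TFrom)] = () => new TTo();
    }

    public TFrom Resolve<TFrom>()
    {
        return (TFrom)factories[typeof(TFrom)]();
    }
}

// Each layer exposes one module that registers only its own types.
public interface ILayerModule
{
    void Configure(IRegistry registry);
}

// Hypothetical types owned by a lower, in-process services layer.
public interface ICustomerService { string Describe(); }

public class CustomerService : ICustomerService
{
    public string Describe() { return "services layer"; }
}

public class ServicesModule : ILayerModule
{
    public void Configure(IRegistry registry)
    {
        registry.Register<ICustomerService, CustomerService>();
    }
}

// Hypothetical types owned by the presentation processes layer.
public interface IAgent { string Describe(); }

public class Agent : IAgent
{
    public string Describe() { return "presentation processes layer"; }
}

public class PresentationModule : ILayerModule
{
    public void Configure(IRegistry registry)
    {
        registry.Register<IAgent, Agent>();
        // Hand the same registry down to the next layer (in-process case only).
        new ServicesModule().Configure(registry);
    }
}

public static class CompositionRoot
{
    // The top layer creates the registry and only knows the layer directly below it.
    public static IRegistry Build()
    {
        IRegistry registry = new SimpleRegistry();
        new PresentationModule().Configure(registry);
        return registry;
    }

    public static void Main()
    {
        IRegistry registry = Build();
        Console.WriteLine(registry.Resolve<IAgent>().Describe());
        Console.WriteLine(registry.Resolve<ICustomerService>().Describe());
    }
}
```

The point of the design is that the top layer never names `CustomerService` directly. Each layer only references the module of the layer immediately below it.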

Some IoC containers allow you to register types in config, code or both. Although I don’t like config anyway, using config to register types can cause problems. Typically, when you are refactoring code, such as renaming types or moving them into other namespaces, you miss updating the config files. This results in exceptions at runtime.

I am going to stick with Unity here, so I am going to reference the Unity assemblies and the MVCContrib Unity assembly:
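A small sketch of why name-based registration is fragile. It does not use any container's config format. It only assumes that a config file identifies a type by a string that is looked up at runtime, which is simulated here with `Type.GetType`. The `Demo.Customers` namespace and `RegistrationLookupDemo` class are hypothetical names for the example.

```csharp
using System;

namespace Demo.Customers
{
    // Hypothetical type that a registration points at.
    public class CustomerAgent { }
}

public static class RegistrationLookupDemo
{
    // A string type name is only checked when the lookup runs.
    public static bool ResolvesFromName(string typeName)
    {
        return Type.GetType(typeName) != null;
    }

    public static void Main()
    {
        // The name matches the type, so the lookup succeeds.
        Console.WriteLine(ResolvesFromName("Demo.Customers.CustomerAgent"));

        // A stale name left behind after a namespace rename fails, but only at runtime.
        Console.WriteLine(ResolvesFromName("Demo.Customer.CustomerAgent"));

        // A typeof reference is checked by the compiler and followed by refactoring tools.
        Console.WriteLine(typeof(Demo.Customers.CustomerAgent).FullName);
    }
}
```

Registering in code keeps the type reference in the second category, so a missed rename becomes a build error instead of a runtime exception.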

- Microsoft.Practices.Unity.dll

- Microsoft.Practices.ObjectBuilder2.dll

- MvcContrib.Unity.dll

The best place to create and configure the container is within the Application object in Global.asax. If you have used MonoRail and Castle Windsor together, this follows the same usage pattern.

    using System.Web;
    using System.Web.Mvc;
    using System.Web.Routing;
    using Microsoft.Practices.Unity;
    using MvcContrib.Unity;

    namespace Web
    {
        public class MvcApplication : HttpApplication, IUnityContainerAccessor
        {
            private static UnityContainer container;

            public static IUnityContainer Container
            {
                get { return container; }
            }

            IUnityContainer IUnityContainerAccessor.Container
            {
                get { return Container; }
            }

            protected void Application_Start()
            {
                RegisterRoutes(RouteTable.Routes);
                ConfigureContainer();
            }

            public static void RegisterRoutes(RouteCollection routes)
            {
                routes.IgnoreRoute("{resource}.axd/{*pathInfo}");
                routes.MapRoute("Default", "{controller}/{action}/{id}", new { controller = "Home", action = "Index", id = "" });
            }

            private void ConfigureContainer()
            {
                if (container == null)
                {
                    container = new UnityContainer();
                    new PresentationProcesses.PresentationProcessesModule().Configure(container);
                    ControllerBuilder.Current.SetControllerFactory(typeof(UnityControllerFactory));
                }
            }
        }
    }

Creating the Agent

The next step is to create a concrete class called “CustomerAgent” that implements the ICustomerAgent interface. We don’t need to make it do anything at the moment, as we are still trying to get the application working at runtime. Plus, when we do start making the “CustomerAgent” do something, we will drive it from a test first. We will create this class next to where the interface lives.

    using System;
    using System.Collections.Generic;

    namespace Web.PresentationProcesses.Customers
    {
        public class CustomerAgent : ICustomerAgent
        {
            public List<Customer> GetCustomerList()
            {
                throw new NotImplementedException();
            }
        }
    }

I have created another class library called “Web.Container.Interfaces”, which contains just one interface called “IModule”. It looks like this.

    using Microsoft.Practices.Unity;

    namespace Web.Container.Interfaces
    {
        public interface IModule
        {
            void Configure(IUnityContainer container);
        }
    }

This class library only references Unity and will be referenced by other assemblies like “PresentationProcesses”.

Now to create the PresentationProcessesModule.

    using Microsoft.Practices.Unity;
    using Web.Container.Interfaces;
    using Web.PresentationProcesses.Customers;

    namespace Web.PresentationProcesses
    {
        public class PresentationProcessesModule : IModule
        {
            public void Configure(IUnityContainer container)
            {
                container.RegisterType<ICustomerAgent, CustomerAgent>();
            }
        }
    }

Now run the app. When you click the “List” link on the home page, you should get a “The method or operation is not implemented.” exception page, which is expected at this time. What this does prove is that the IoC container is working correctly.

The next stage in this process would be to drive out getting some real data from somewhere and returning it from the CustomerAgent. That is going to be in my follow-on post, but for now we can simply new up a collection with some new’d up customer objects as shown below.

    using System.Collections.Generic;

    namespace Web.PresentationProcesses.Customers
    {
        public class CustomerAgent : ICustomerAgent
        {
            public List<Customer> GetCustomerList()
            {
                return new List<Customer>
                {
                    new Customer
                    {
                        Name = "company1",
                        AccountNumber = "12345",
                        AccountManagerName = "mr account manager1",
                        City = "Some Town",
                        Country = "England"
                    },
                    new Customer
                    {
                        Name = "company2",
                        AccountNumber = "54321",
                        AccountManagerName = "mr account manager2",
                        City = "Some other place",
                        Country = "England"
                    },
                };
            }
        }
    }

Run the app

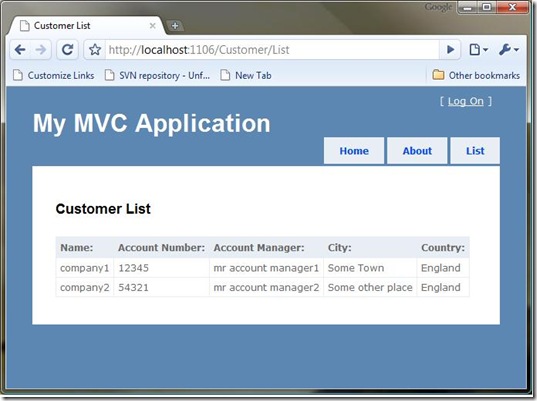

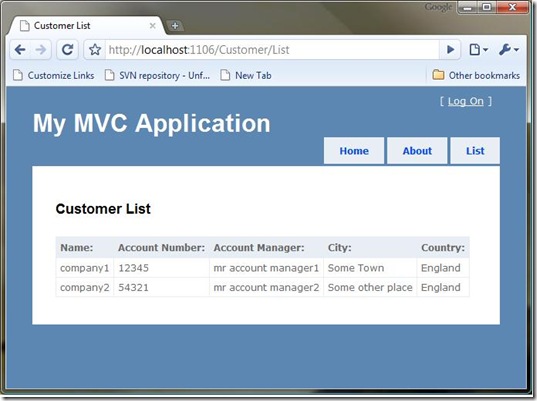

Now when you run the application and click on the “List” link in the menu, you should get the customer list page.

Moving on

What we have got is an ASP.NET MVC application that has Unity in place to resolve types at runtime. We have types (Customer and CustomerListViewModel) defined in the presentation processes layer that the views are bound to. The customer agent returns instances of these types.

The next step will be to change the customer agent to get the data from somewhere. This will be driven out via tests, and it is going to be the focus of my next post.